There are a number of ways to improve performance of the reports, and one of the mechanism is using query results caching during off hours by running queries and hence, causing the server to cache their results.

If data is refreshed multiple times during the day, and BI cache is purged after data refresh, then the Analysis/reports queries need to run for BI cache seeding after every time the data is refreshed.

This can be achieved by scheduling agents/iBots with large Analysis/reports to run within a few (ex. 20 min) minutes after the data refresh by following the steps below.

1.Create repository variable LAST_UPDATE_DATE with init block sql as

Select max(dw_updated) from SALES_FACT

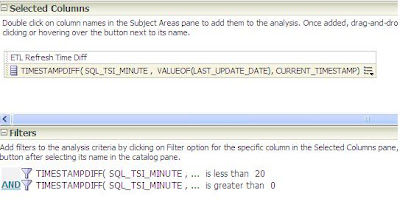

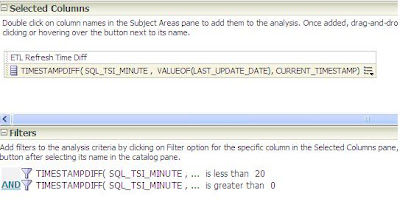

2.Create an Analysis ( to be used in condition in agents/iBots) with column “ETL Refresh Diff Time” with formula

TIMESTAMPDIFF ( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP)

with the filter as follows

TIMESTAMPDIFF ( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP) is less than 20

and TIMESTAMPDIFF( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP) is greater than 0

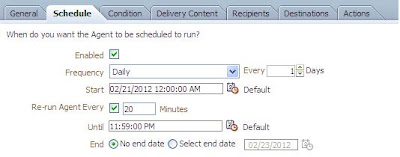

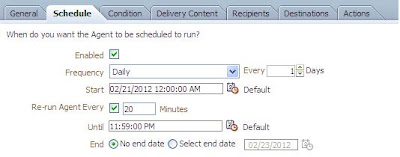

3. Create an Agent/iBot scheduled to run every 20 min.

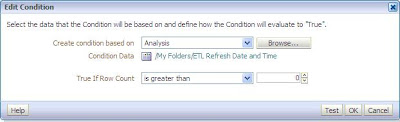

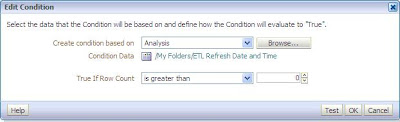

4.Use an analysis created in step 2 in the condition as shown below.

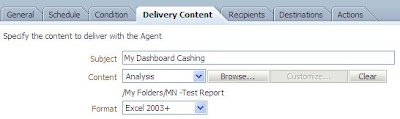

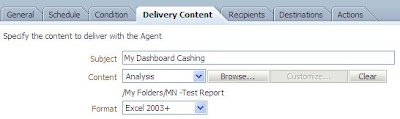

5. In the Delivery Content tab, select the report ( MN-Test Report) to seed the BI cache.

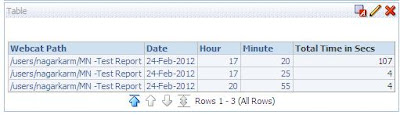

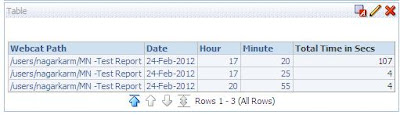

6. Add recipients and destination as Oracle BI Server Cache (For seeding cache) and save the agent/iBot. 7. When the Condition returns TRUE, the Agent/iBot run Analysis and seed the BI server cache. In this example, total time for displaying the data after data refresh was 107 sec. After subsequent runs, the analysis returned data in 4 sec as shown below from usage tracking data.

The performance is improved by a factor of more than 26. After testing this technique for multiple reports, the performance of large reports improved.

If data is refreshed multiple times during the day, and BI cache is purged after data refresh, then the Analysis/reports queries need to run for BI cache seeding after every time the data is refreshed.

This can be achieved by scheduling agents/iBots with large Analysis/reports to run within a few (ex. 20 min) minutes after the data refresh by following the steps below.

1.Create repository variable LAST_UPDATE_DATE with init block sql as

Select max(dw_updated) from SALES_FACT

2.Create an Analysis ( to be used in condition in agents/iBots) with column “ETL Refresh Diff Time” with formula

TIMESTAMPDIFF ( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP)

with the filter as follows

TIMESTAMPDIFF ( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP) is less than 20

and TIMESTAMPDIFF( SQL_TSI_MINUTE, VALUEOF(“LAST_UPDATE_DATE”)), CURRENT_TIMESTAMP) is greater than 0

3. Create an Agent/iBot scheduled to run every 20 min.

4.Use an analysis created in step 2 in the condition as shown below.

5. In the Delivery Content tab, select the report ( MN-Test Report) to seed the BI cache.

The performance is improved by a factor of more than 26. After testing this technique for multiple reports, the performance of large reports improved.

No comments:

Post a Comment